#Scrape E-Commerce Website Data

This blog will guide you through web scraping and how you can use it to scrape e-commerce websites to grow your business.

For More Information:-

How to Effortlessly Scrape Product Listings from Rakuten?

Use simple steps to scrape product listings from Rakuten efficiently. Enhance your e-commerce business by accessing valuable data with web scraping techniques.

Know More: https://www.iwebdatascraping.com/effortlessly-scrape-product-listings-from-rakuten.php

#scraping Rakuten's product listings#Data Scraped from Rakuten#Scrape Product Listings From Rakuten#Rakuten data scraping services#Web Scraping Rakuten Website#Web scraping e-commerce sites

E-Commerce Data Scraping Services - E-Commerce Data Collection Services

We offer reliable e-commerce data scraping services for product data collection from websites in multiple countries, including the USA, UK, and UAE. Contact us for complete solutions.

Know more:

#E-commerce data scraping#E-Commerce Data Collection Services#Scrape e-commerce product data#Web scraping retail product price data#scrape data from e-commerce websites

The top five targeted industries are technology (Bad Bots make up 76% of its internet traffic), gaming (29%), social media (46%), e-commerce (65%), and financial services (45%). When a bot fails in its purpose, criminals increasingly switch to human-operated fraud farms. Arkose estimates there were more than 3 billion fraud farm attacks in H1 2023. These fraud farms appear to be located primarily in Brazil, India, Russia, Vietnam, and the Philippines.

The prevalence of Bad Bots is likely to increase for two reasons: the arrival and general availability of artificial intelligence (primarily gen-AI), and the growing business professionalism of the criminal underworld, with new crime-as-a-service (CaaS) offerings.

From Q1 to Q2, intelligent bot traffic nearly quadrupled. “Intelligent [bots] employ sophisticated techniques like machine learning and AI to mimic human behavior and evade detection,” notes the report (PDF). “This makes them skilled at adaptation as they target vulnerabilities in IoT devices, cloud services, and other emerging technologies.” They are widely used, for example, to circumvent 2FA defenses against phishing.

Separately, the rise of artificial intelligence may or may not relate to a dramatic rise in ‘scraping’ bots that gather data and images from websites. From Q1 to Q2, scraping increased by 432%. Scraping social media accounts can gather the type of personal data that can be used by gen-AI to mass produce compelling phishing attacks. Other bots could then be used to deliver account takeover emails, romance scams, and so on. Scraping also targets the travel and hospitality sectors.

More at the link.

Why You Should Learn Web Scraping with Python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here’s why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

Much real-world data lives on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews

Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content.

This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.
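To give a feel for the "simple HTML parsing" step, here is a minimal sketch that uses Python's standard-library `html.parser` as a dependency-free stand-in for BeautifulSoup. The `name`/`price` class names and the sample markup are hypothetical; a real page needs its own selectors, and this is not BeautifulSoup's API.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect product names and prices from elements with class "name"/"price".

    The class names are hypothetical; real pages need their own selectors.
    """

    def __init__(self):
        super().__init__()
        self.products = []          # one dict per product: {"name": ..., "price": ...}
        self.current_field = None   # field whose text we are waiting to read

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "name" in classes:
            self.products.append({})  # each name element starts a new product
            self.current_field = "name"
        elif "price" in classes and self.products:
            self.current_field = "price"

    def handle_data(self, data):
        text = data.strip()
        if self.current_field and text:
            self.products[-1][self.current_field] = text
            self.current_field = None


def extract_products(html):
    """Parse an HTML string and return the list of product dicts."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


sample = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""
print(extract_products(sample))
```

For this sample the function returns two dicts, one per product. BeautifulSoup or Scrapy would shorten the selector logic considerably, which is why the post recommends them for real projects.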

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real-time.

Collecting job postings to analyze industry demand.
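As a small illustration of the first use case, the sketch below summarizes scraped offers per product: the lowest price, which store offers it, and the average across stores. The `(store, product, price)` row format and the store names are assumptions made for the example.

```python
from statistics import mean


def summarize_prices(offers):
    """Summarize (store, product, price) rows into per-product price stats."""
    by_product = {}
    for store, product, price in offers:
        by_product.setdefault(product, []).append((price, store))

    summary = {}
    for product, entries in by_product.items():
        low_price, low_store = min(entries)  # cheapest offer (ties broken by store name)
        summary[product] = {
            "lowest": low_price,
            "store": low_store,
            "average": round(mean(price for price, _ in entries), 2),
        }
    return summary


# Hypothetical rows, e.g. produced by scrapers run against three stores.
offers = [
    ("store_a", "usb-c cable", 10.0),
    ("store_b", "usb-c cable", 8.0),
    ("store_c", "usb-c cable", 9.0),
]
print(summarize_prices(offers))
```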

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.
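The first two precautions can be partly automated. This sketch uses the standard-library `urllib.robotparser` to skip disallowed paths and honor a `Crawl-delay` directive between requests. The robots.txt content, the `shop.example` URLs, and the `example-bot` user agent are placeholders; against a live site you would call `set_url()` and `read()` instead of parsing a string.

```python
import time
import urllib.robotparser

# Hypothetical robots.txt content; in practice use set_url(".../robots.txt") and read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""


def make_policy(robots_text):
    """Build a RobotFileParser from the text of a robots.txt file."""
    policy = urllib.robotparser.RobotFileParser()
    policy.parse(robots_text.splitlines())
    return policy


def polite_urls(policy, urls, user_agent="example-bot", default_delay=1.0, sleep=time.sleep):
    """Yield only the URLs robots.txt allows, pausing between consecutive ones."""
    delay = policy.crawl_delay(user_agent) or default_delay
    first = True
    for url in urls:
        if not policy.can_fetch(user_agent, url):
            continue  # this path is disallowed for our user agent
        if not first:
            sleep(delay)  # space out requests so the server is not overloaded
        first = False
        yield url


policy = make_policy(ROBOTS_TXT)
for url in polite_urls(policy, ["https://shop.example/products/1",
                                "https://shop.example/checkout/cart"]):
    print("would fetch", url)
```

Passing `sleep` as a parameter keeps the throttling testable; the default is the real `time.sleep`. Compliance with terms of service and privacy law still needs human review, since no script can check that.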

If you'd like guidance on getting started or exploring specific use cases, let me know!

What is an ISP Proxy?

An ISP proxy is a type of proxy that is hosted in a data center but uses residential IP addresses. ISP proxies let you take advantage of the speed of data center networks while benefiting from the good reputation of residential IPs.

Because ISP proxies combine aspects of data center and residential proxies, it helps to define those terms first.

Data Center Proxies are designed with simplicity in mind. In most cases, traffic is forwarded from the proxy client to the data center, where the provider redirects your traffic to the destination. As a result, the traffic originates from one of the IP addresses in the data center, meaning multiple users often share the same IP address. For anyone trying to block their use, this can be a red flag. The main advantages of data center proxies are speed and network stability, as data center networks are very stable and offer gigabit speeds.

Residential Proxies rely on the ability of an Internet Service Provider (ISP) to assign IP addresses tied to specific locations. In other words, you get a legitimate and unique IP address that masks your actual location.

With ISP proxies, you get access to these legitimate and unique IP ranges, and hosting them in a data center provides an additional benefit. This makes ISP proxies particularly effective, offering the benefits of both residential proxy services (such as the ability to surf the web from anywhere and the good reputation of residential IPs) and data center proxy services (such as impressive speed).

---

Why Do You Need ISP Proxies?

As you can see, ISP proxies combine the undetectable features of residential proxies with the speed of data center proxies. ISP proxy providers achieve this by working with different ISPs to provide IP addresses instead of the user's IP address. This makes them especially useful for various tasks. Let’s take a look at some of them:

1. Web Scraping

ISP proxies are the best choice for web scraping. They provide fast, undetectable connections that allow you to scrape as much data as needed.

2. Bypassing Rate Limits by Appearing as Residential Connections

To prevent attacks like Denial of Service (DoS), most websites and data centers implement rate limiting. This prevents a single IP address from making too many requests or downloading too much data from a single website. The direct consequence is that if you perform web scraping, you are likely to hit these limits.
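To make the rate-limiting mechanism concrete, here is a minimal sketch of a per-IP sliding-window limiter of the kind a website might run. The limit, window length, and IP addresses are illustrative assumptions, not any particular site's configuration. Because the budget is tracked per IP address, one address that sends too many requests is refused while other addresses are unaffected.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client IP within `window` seconds."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.hits = defaultdict(deque)  # ip -> timestamps of recent requests

    def allow(self, ip):
        now = self.clock()
        recent = self.hits[ip]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False  # over the limit; a server would typically answer HTTP 429
        recent.append(now)
        return True


limiter = SlidingWindowLimiter(limit=3, window=60)
print([limiter.allow("203.0.113.5") for _ in range(4)])
```

The injectable `clock` exists only so the behavior can be tested without waiting; production limiters usually keep this state in a shared store rather than in process memory.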

However, ISP proxies offer a way to bypass rate limits; they use ISP IP addresses to trick the rate limiter code, making it appear as if the requests are coming from a different residential location.

3. Accessing Geo-Restricted Content

Like all proxies, ISP proxies allow you to change your location, making it appear as though you are accessing the internet from another country or region.

4. Bulk Purchasing of Limited Edition Products

E-commerce websites take proactive measures to prevent bots (automated scripts) from purchasing products on their platforms. One common technique is to blacklist IP addresses associated with excessive purchasing behavior. ISP proxies can help you bypass these restrictions.

---

A Closer Look at ISP Proxies

As mentioned earlier, ISP proxies are hosted in data centers and provide internet service provider IP addresses to the destination. Since ISPs own large pools of public IP addresses, it is nearly impossible to trace the computer using the proxy.

While the main benefit is that they are ideal for web scraping, they also provide other features such as:

- Security: End-to-end encryption, firewalls, web filtering, auditing, and analysis.

- Performance: Web caching and high-speed internet from data centers to destinations.

What makes ISP proxies unique is that they are the only proxies that deal directly with ISPs to provide the best anonymous connection. While data center proxies are a good option for speed and privacy at a lower price, ISP proxies offer both speed and anonymity, giving them a significant advantage.

---

Use Cases

The speed and undetectability of ISP proxies make them the preferred choice for large-scale network operations such as web scraping, SEO monitoring, social media monitoring, and ad verification. Let’s take a deeper look at each use case.

1. Web Scraping

Web scraping is the process of analyzing HTML code to extract the required data from websites.

While the concept is simple, large-scale web scraping requires proxy servers to change your location, because many websites display different pages based on your location. Additionally, to scrape large volumes of data within a reasonable timeframe, proxies need to be extremely fast. For these reasons, ISP proxies are considered the best choice for web scraping.
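In Python, routing a scraper's traffic through a proxy can be sketched with the standard library's `urllib.request.ProxyHandler`. The proxy host, port, and credentials below are placeholders, not a real provider's endpoint; substitute the values your proxy service gives you.

```python
import urllib.request

# Hypothetical proxy endpoint and credentials; replace with your provider's values.
PROXY_URL = "http://user:password@proxy.example:8080"


def build_proxied_opener(proxy_url):
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)
# opener.open("https://www.example.com/", timeout=10) would now go via the proxy.
```

Credentials embedded in the proxy URL are sent as a Basic `Proxy-Authorization` header. Libraries such as `requests` accept an equivalent `proxies` mapping if you prefer them.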

2. SEO Monitoring

SEO monitoring involves checking the performance of your website, content, articles, etc., in search engine results. However, search engine results are influenced by your location, and the cookies attached to your web browser can affect the displayed results. To bypass these issues, proxies are needed to anonymize your connection or simulate a user from another global location.

Moreover, SEO monitoring involves frequent access to the same webpage, which could lead to your IP address being blacklisted or subjected to rate limits. Therefore, it’s best to use proxies when performing SEO monitoring.

3. Social Media Monitoring

Similar to SEO monitoring, activities in social media monitoring can raise suspicion. As a result, you are forced to comply with the host's rules to avoid being blocked or banned from the platform. For example, managing multiple accounts with the same IP address would certainly raise red flags.

In such cases, ISP proxies can be particularly useful because they present social networks with an IP address other than your own, reducing the risk of being blocked.

4. Ad Verification

As a marketer, you may want to verify that your advertising partners are delivering on their promises and that the statistics they provide are legitimate.

Ad verification involves scanning the web to check if the ads displayed on various websites match campaign standards, appear on the right sites, and reach the target audience. Since these scans need to cover millions of websites, ISP proxies are ideal to ensure that your ad verification process runs quickly and without being blocked due to excessive use of IP addresses.

---

Conclusion

ISP proxies combine the best of both worlds by offering the speed and stability of data center proxies along with the anonymity and legitimacy of residential proxies. This makes them the perfect tool for activities that require speed, anonymity, and high-volume operations, such as web scraping, SEO monitoring, social media management, and ad verification. By working with ISPs to provide legitimate and unique IP addresses, ISP proxies help users bypass restrictions, access geo-restricted content, and operate more efficiently online.

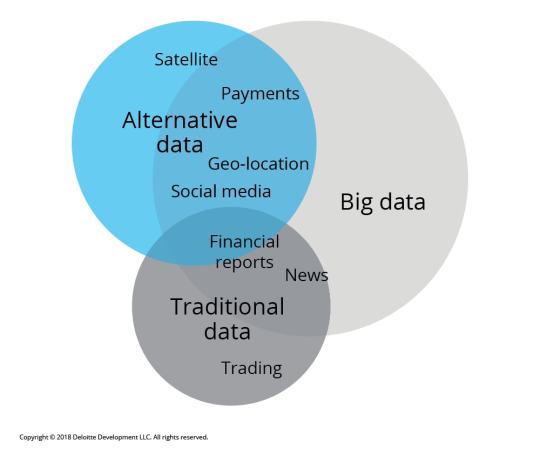

📊 Unlocking Trading Potential: The Power of Alternative Data 📊

In the fast-paced world of trading, traditional data sources—like financial statements and market reports—are no longer enough. Enter alternative data: a game-changing resource that can provide unique insights and an edge in the market. 🌐

What is Alternative Data?

Alternative data refers to non-traditional data sources that can inform trading decisions. These include:

Social Media Sentiment: Analyzing trends and sentiments on platforms like Twitter and Reddit can offer insights into public perception of stocks or market movements. 📈

Satellite Imagery: Observing traffic patterns in retail store parking lots can indicate sales performance before official reports are released. 🛰️

Web Scraping: Gathering data from e-commerce websites to track product availability and pricing trends can highlight shifts in consumer behavior. 🛒

Sensor Data: Utilizing IoT devices to track activity in real-time can give traders insights into manufacturing output and supply chain efficiency. 📡
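For the web scraping source, one simple signal is how much each product's price moved between the first and latest scrape. This sketch assumes a hypothetical row format of `(date, product, price)` listed in chronological order; it only computes the change and says nothing about whether that change predicts anything.

```python
def price_changes(snapshots):
    """Percent change between each product's first and last observed price.

    `snapshots` is a list of (date, product, price) rows in chronological order,
    e.g. collected by a daily scraper (hypothetical data format).
    """
    first, last = {}, {}
    for _date, product, price in snapshots:
        first.setdefault(product, price)  # keep only the earliest price
        last[product] = price             # overwrite until the latest price remains
    return {
        product: round(100 * (last[product] - first[product]) / first[product], 1)
        for product in first
    }


snapshots = [
    ("2024-01-01", "tv", 500.0),
    ("2024-01-01", "laptop", 1000.0),
    ("2024-02-01", "tv", 450.0),
    ("2024-02-01", "laptop", 1050.0),
]
print(price_changes(snapshots))
```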

How GPT Enhances Data Analysis

With tools like GPT, traders can sift through vast amounts of alternative data efficiently. Here’s how:

Natural Language Processing (NLP): GPT can analyze news articles, earnings calls, and social media posts to extract key insights and gauge sentiment. This allows traders to react swiftly to market changes.

Predictive Analytics: By training GPT on historical data and alternative data sources, traders can build models to forecast price movements and market trends. 📊

Automated Reporting: GPT can generate concise reports summarizing alternative data findings, saving traders time and enabling faster decision-making.

Why It Matters

Incorporating alternative data into trading strategies can lead to more informed decisions, improved risk management, and ultimately, better returns. As the market evolves, staying ahead of the curve with innovative data strategies is essential. 🚀

Join the Conversation!

What alternative data sources have you found most valuable in your trading strategy? Share your thoughts in the comments! 💬

#Trading #AlternativeData #GPT #Investing #Finance #DataAnalytics #MarketInsights

Must-Have Programmatic SEO Tools for Superior Rankings

Understanding Programmatic SEO

What is programmatic SEO?

Programmatic SEO uses automated tools and scripts to scale SEO efforts. Unlike traditional SEO, which relies on extensive manual effort, programmatic SEO extracts data and uses automation for content development, on-page SEO element optimization, and large-scale link building. It is especially effective on large websites with thousands of pages, like e-commerce platforms, travel sites, and news portals.

The Power of SEO Automation

SEO automation saves time, especially when large volumes of content need optimization. Programmatic tools make it easier to analyze vast amounts of data, identify opportunities, and make changes quickly. This keeps you ahead in the competitive SEO game and helps drive more organic traffic to your site.

Top Programmatic SEO Tools

1. Screaming Frog SEO Spider

Screaming Frog is a multipurpose tool that crawls websites to identify SEO issues, from broken links and duplicate content to missing metadata and other on-page problems. It shortens a procedure that could take thousands of hours of manual work to hours of automated work.

Example: It helped an e-commerce giant fix over 10,000 broken links and increase their organic traffic by as much as 20%.

2. Ahrefs

Ahrefs is an all-in-one SEO tool that helps you understand your website performance, backlinks, and keyword research. The site audit shows technical SEO issues, whereas its keyword research and content explorer tools help one locate new content opportunities.

Example: A travel blog that used Ahrefs to identify high-potential keywords and update its existing content for those keywords grew its search visibility by 30%.

3. SEMrush

SEMrush is another well-known, full-featured SEO tool, with features for keyword research, site audits, backlink analysis, and competitor analysis. Its position tracking and content optimization tools are very helpful in programmatic SEO.

Example: A news portal used SEMrush to analyze competitor strategies, improved its content, and climbed significantly, reaching the first page of rankings.

4. Google Data Studio

Google Data Studio lets users build interactive, professional-looking dashboards that visualize SEO data. It can integrate data from different sources, such as Google Analytics, Google Search Console, and third-party tools, to track SEO performance in real time.

Example: Google Data Studio helped a retailer stay up-to-date on all of their SEO KPIs to drive data-driven decisions that led to a 25% organic traffic improvement.

5. Python

Python is a powerful general-purpose programming language that can automate almost any SEO task. You can write Python scripts to scrape data, analyze huge datasets, automate content optimization, and much more.

Example: A marketing agency used Python to automate meta descriptions for thousands of products, saving manual effort and improving search rankings.
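A meta-description script of this kind might look like the sketch below: fill a template from product fields, trim the result at a word boundary, and reject exact duplicates. The product fields (`sku`, `name`, `brand`, `feature`), the template wording, and the 155-character cap are assumptions; 155 characters is a common rule of thumb, not a limit published by search engines.

```python
def truncate(text, max_len):
    """Shorten text to at most max_len characters, cutting at a word boundary."""
    if len(text) <= max_len:
        return text
    cut = text[: max_len - 1].rsplit(" ", 1)[0]
    return cut.rstrip(" ,.;:") + "…"  # the ellipsis keeps the total within max_len


def build_meta_descriptions(products, max_len=155):
    """Return {sku: description} from a template, rejecting exact duplicates."""
    seen = {}
    descriptions = {}
    for product in products:
        text = f"Shop the {product['name']} by {product['brand']}. {product['feature']}."
        desc = truncate(text, max_len)
        if desc in seen:
            raise ValueError(f"{product['sku']} duplicates the description of {seen[desc]}")
        seen[desc] = product["sku"]
        descriptions[product["sku"]] = desc
    return descriptions


products = [
    {"sku": "A1", "name": "Trail Runner 2", "brand": "Acme", "feature": "Lightweight mesh upper"},
    {"sku": "B7", "name": "City Walker", "brand": "Acme", "feature": "Cushioned sole " * 20},
]
for sku, desc in build_meta_descriptions(products).items():
    print(sku, len(desc), desc)
```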

How to Do Programmatic SEO

Step 1: In-Depth Site Analysis

Before diving into programmatic SEO, conduct a full site audit. Tools like Screaming Frog, Ahrefs, and SEMrush can uncover technical SEO issues, on-page optimization gaps, and opportunities to earn backlinks.

Step 2: Identify High-Impact Opportunities

Use the data you collected to find the opportunities with the biggest payoff. Look for pages that could attract a high volume of traffic but underperform for their target keywords, and for content gaps that new or updated content could fill.
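One way to rank such pages is to estimate how many clicks each page misses compared with a target click-through rate. In this sketch the row keys (`page`, `impressions`, `clicks`), the 5% target CTR, and the 1,000-impression floor are all assumptions to tune for your own site; it is a heuristic, not a method prescribed by any of the tools above.

```python
def rank_opportunities(rows, target_ctr=0.05, min_impressions=1000):
    """Rank pages by estimated clicks missed relative to an assumed target CTR.

    Each row is a dict with hypothetical keys "page", "impressions", "clicks",
    e.g. from a search-analytics export.
    """
    scored = []
    for row in rows:
        impressions, clicks = row["impressions"], row["clicks"]
        if impressions < min_impressions:
            continue  # too little traffic to count as high impact
        missed = impressions * target_ctr - clicks  # clicks below the target rate
        if missed > 0:
            scored.append((row["page"], round(missed)))
    return sorted(scored, key=lambda item: item[1], reverse=True)


rows = [
    {"page": "/a", "impressions": 20000, "clicks": 200},
    {"page": "/b", "impressions": 5000, "clicks": 400},
    {"page": "/c", "impressions": 10000, "clicks": 100},
    {"page": "/d", "impressions": 500, "clicks": 0},
]
print(rank_opportunities(rows))
```

Page `/b` already beats the target rate and `/d` has too few impressions, so only `/a` and `/c` are returned, largest gap first.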

Step 3: Content Automation

This is one of the most vital parts of programmatic SEO. Scripts and tools, such as Python programs that generate content, are useful for producing large amounts of high-quality content in a short time. Make sure the content is free of duplication, relevant, and optimized for all your target keywords.

Example: An e-commerce website generated unique product descriptions for thousands of its products with a Python script, gaining 15% more organic traffic.
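To check generated descriptions for duplication, one common technique is to compare word shingles (runs of k consecutive words) with Jaccard similarity. This sketch flags pairs at or above a similarity threshold; the shingle size of 3 and the 0.5 threshold are assumptions to adjust, and the sample texts are made up.

```python
from itertools import combinations


def shingles(text, k=3):
    """Set of k-word shingles; short texts yield a single shingle."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a, b):
    """Size of the intersection divided by the size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def near_duplicates(docs, threshold=0.5, k=3):
    """Return (name1, name2, similarity) for pairs at or above the threshold."""
    sets = {name: shingles(text, k) for name, text in docs.items()}
    pairs = []
    for x, y in combinations(sorted(sets), 2):
        score = jaccard(sets[x], sets[y])
        if score >= threshold:
            pairs.append((x, y, round(score, 2)))
    return pairs


docs = {
    "p1": "lightweight trail running shoe with breathable mesh upper",
    "p2": "lightweight trail running shoe with breathable knit upper",
    "p3": "stainless steel water bottle keeps drinks cold",
}
print(near_duplicates(docs))
```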

Step 4: Optimize On-Page Elements

Tools like Screaming Frog and Ahrefs can also be used to find gaps in on-page SEO elements. These include meta titles, meta descriptions, headings, and missing alt text for images. Make these changes as efficiently as possible.
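For a rough idea of what such an audit checks, here is a small standard-library sketch that inspects one HTML page for a title, a meta description, the number of `<h1>` headings, and images with no `alt` attribute. The 60-character title limit and the "exactly one h1" rule are common rules of thumb assumed for the example, not requirements of Screaming Frog, Ahrefs, or any search engine.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Record the on-page elements the audit below checks."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.h1_count = 0
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and "alt" not in attrs:
            # alt="" is allowed for decorative images, so only a missing attribute counts.
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    """Return a list of human-readable on-page issues for one HTML document."""
    parser = OnPageAudit()
    parser.feed(html)
    parser.close()
    issues = []
    title = parser.title.strip()
    if not title:
        issues.append("missing <title>")
    elif len(title) > 60:
        issues.append("title longer than 60 characters")
    if not parser.meta_description:
        issues.append("missing meta description")
    if parser.h1_count != 1:
        issues.append(f"expected one <h1>, found {parser.h1_count}")
    if parser.images_missing_alt:
        issues.append(f"{parser.images_missing_alt} image(s) without alt attribute")
    return issues


page = ("<html><head><title>Trail Runner 2</title></head><body>"
        "<h1>Trail Runner 2</h1><img src='a.jpg'><img src='b.jpg' alt='Side view'>"
        "</body></html>")
print(audit(page))
```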

Step 5: Build High-Quality Backlinks

Link building is one of the most vital components of SEO. Tools like Ahrefs and SEMrush help identify backlink opportunities and automate outreach campaigns. Focus on acquiring high-quality links from authoritative websites.

Example: A SaaS company automated its link-building outreach using SEMrush, earned backlinks from industry-leading blogs, and considerably improved its domain authority.

Step 6: Monitor and Analyze Performance

Regularly track your SEO performance in Google Data Studio. Analyze the data from your programmatic efforts and make data-driven decisions to refine your strategy.

See Programmatic SEO in Action

A 50% Increase in Organic Traffic for an E-Commerce Site

An electronics e-commerce website set up programmatic SEO for its product pages, using Python scripts to generate unique meta descriptions and Screaming Frog to fix technical issues. Within just six months, its organic traffic rose by 50%.

A Travel Blog Boosts Search Visibility by 40%

A travel blog used Ahrefs and SEMrush to identify high-potential keywords and optimize its content. By automating content updates and link-building activities, it achieved a 40% increase in search visibility and more organic visitors.

A News Portal Improves User Engagement

A news portal used Google Data Studio to build real-time dashboards for monitoring its SEO performance. Insights from these dashboards helped it optimize its content strategy, leading to increased user engagement and organic traffic.

Challenges and Solutions in Programmatic SEO

Ensuring Content Quality

Quality may take a hit in the automated process of creating content. Therefore, ensure that your automated scripts can produce unique, high-quality, and relevant content. Make sure to review and fine-tune the content generation process periodically.

Handling Huge Amounts of Data

Dealing with huge amounts of data can become overwhelming. Use data visualization tools such as Google Data Studio to create interactive, easy-to-read dashboards that support effective decision-making.

Keeping Current With Algorithm Changes

Search engine algorithms are always in a state of flux. Keep current on all the recent updates and calibrate your programmatic SEO strategies accordingly. Get ahead of the learning curve by following industry blogs, attending webinars, and taking part in SEO forums.

Future of Programmatic SEO

The future of programmatic SEO looks promising, as advances in artificial intelligence and machine learning take this space to new heights. AI-driven tools will allow more sophisticated automation of tasks, making it easier and faster for marketers to optimize sites.

AI-driven content creation tools can already produce highly relevant, engaging content at scale, multiplying the potential of programmatic SEO.

Conclusion

Programmatic SEO is the next step for any digital marketer who wants to scale their efforts in a competitive online landscape. The right tools and techniques let you automate key SEO tasks and optimize your website for more organic traffic. Whether you run an e-commerce site, a travel blog, or a news portal, programmatic SEO can help you reach your goals more effectively and efficiently.

#Programmatic SEO#Programmatic SEO tools#SEO Tools#SEO Automation Tools#AI-Powered SEO Tools#Programmatic Content Generation#SEO Tool Integrations#AI SEO Solutions#Scalable SEO Tools#Content Automation Tools#best programmatic seo tools#programmatic seo tool#what is programmatic seo#how to do programmatic seo#seo programmatic#programmatic seo wordpress#programmatic seo guide#programmatic seo examples#learn programmatic seo#how does programmatic seo work#practical programmatic seo#programmatic seo ai

25 Python Projects to Supercharge Your Job Search in 2024

Introduction: In the competitive world of technology, a strong portfolio of practical projects can make all the difference in landing your dream job. As a Python enthusiast, building a diverse range of projects not only showcases your skills but also demonstrates your ability to tackle real-world challenges. In this blog post, we'll explore 25 Python projects that can help you stand out and secure that coveted position in 2024.

1. Personal Portfolio Website

Create a dynamic portfolio website that highlights your skills, projects, and resume. Showcase your creativity and design skills to make a lasting impression.

2. Blog with User Authentication

Build a fully functional blog with features like user authentication and comments. This project demonstrates your understanding of web development and security.

3. E-Commerce Site

Develop a simple online store with product listings, shopping cart functionality, and a secure checkout process. Showcase your skills in building robust web applications.

4. Predictive Modeling

Create a predictive model for a relevant field, such as stock prices, weather forecasts, or sales predictions. Showcase your data science and machine learning prowess.

5. Natural Language Processing (NLP)

Build a sentiment analysis tool or a text summarizer using NLP techniques. Highlight your skills in processing and understanding human language.

6. Image Recognition

Develop an image recognition system capable of classifying objects. Demonstrate your proficiency in computer vision and deep learning.

7. Automation Scripts

Write scripts to automate repetitive tasks, such as file organization, data cleaning, or downloading files from the internet. Showcase your ability to improve efficiency through automation.
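As a starting point for the file-organization idea, this sketch moves each file in a folder into a subfolder named after its lowercase extension, with extension-less files going to `no_extension`. The folder layout and naming scheme are assumptions for illustration, and the script assumes no existing plain file already has the same name as a target subfolder.

```python
import shutil
from pathlib import Path


def organize_by_extension(folder):
    """Move each file in `folder` into a subfolder named after its extension.

    Returns {original file name: subfolder name}. Assumes no plain file already
    uses the name of a target subfolder (e.g. a file literally called "pdf").
    """
    folder = Path(folder)
    moved = {}
    for path in list(folder.iterdir()):  # snapshot first, since we create folders
        if not path.is_file():
            continue  # leave existing subfolders alone
        ext = path.suffix.lower().lstrip(".") or "no_extension"
        target_dir = folder / ext
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(path), str(target_dir / path.name))
        moved[path.name] = ext
    return moved


# Example: organize_by_extension(Path.home() / "Downloads")
```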

8. Web Scraping

Create a web scraper to extract data from websites. This project highlights your skills in data extraction and manipulation.

9. Pygame-based Game

Develop a simple game using Pygame or any other Python game library. Showcase your creativity and game development skills.

10. Text-based Adventure Game

Build a text-based adventure game or a quiz application. This project demonstrates your ability to create engaging user experiences.

11. RESTful API

Create a RESTful API for a service or application using Flask or Django. Highlight your skills in API development and integration.

12. Integration with External APIs

Develop a project that interacts with external APIs, such as social media platforms or weather services. Showcase your ability to integrate diverse systems.

13. Home Automation System

Build a home automation system using IoT concepts. Demonstrate your understanding of connecting devices and creating smart environments.

14. Weather Station

Create a weather station that collects and displays data from various sensors. Showcase your skills in data acquisition and analysis.

15. Distributed Chat Application

Build a distributed chat application using a messaging protocol like MQTT. Highlight your skills in distributed systems.

16. Blockchain or Cryptocurrency Tracker

Develop a simple blockchain or a cryptocurrency tracker. Showcase your understanding of blockchain technology.
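For the "simple blockchain" option, the core idea fits in a few lines: each block stores the hash of the previous block, so altering any earlier block breaks the chain. This is a toy sketch with no proof-of-work, networking, or consensus, and the block fields are illustrative choices rather than any real cryptocurrency's format.

```python
import hashlib
import json
import time


def block_hash(block):
    """SHA-256 of the block's fields (excluding its own hash), serialized deterministically."""
    payload = {k: v for k, v in block.items() if k != "hash"}
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()


def make_block(data, prev_hash, timestamp=None):
    block = {
        "timestamp": time.time() if timestamp is None else timestamp,
        "data": data,
        "prev_hash": prev_hash,
    }
    block["hash"] = block_hash(block)
    return block


def build_chain(items):
    chain = [make_block("genesis", "0" * 64)]
    for item in items:
        chain.append(make_block(item, chain[-1]["hash"]))  # link to the previous block
    return chain


def is_valid(chain):
    """Check every link and every stored hash."""
    for prev, block in zip(chain, chain[1:]):
        if block["prev_hash"] != prev["hash"]:
            return False
    return all(block["hash"] == block_hash(block) for block in chain)


chain = build_chain(["alice pays bob 5", "bob pays carol 2"])
print(is_valid(chain))
```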

17. Open Source Contributions

Contribute to open source projects on platforms like GitHub. Demonstrate your collaboration and teamwork skills.

18. Network or Vulnerability Scanner

Build a network or vulnerability scanner to showcase your skills in cybersecurity.

19. Decentralized Application (DApp)

Create a decentralized application using a blockchain platform like Ethereum. Showcase your skills in developing applications on decentralized networks.

20. Machine Learning Model Deployment

Deploy a machine learning model as a web service using frameworks like Flask or FastAPI. Demonstrate your skills in model deployment and integration.

21. Financial Calculator

Build a financial calculator that incorporates relevant mathematical and financial concepts. Showcase your ability to create practical tools.
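A typical first feature is a loan payment calculator based on the standard amortization formula `M = P * r * (1 + r)**n / ((1 + r)**n - 1)`, where `P` is the principal, `r` the monthly interest rate, and `n` the number of monthly payments. The sketch assumes fixed-rate loans with monthly payments.

```python
def monthly_payment(principal, annual_rate, years):
    """Fixed monthly payment for a fully amortizing loan (monthly compounding assumed)."""
    n = years * 12          # number of monthly payments
    r = annual_rate / 12    # monthly interest rate
    if r == 0:
        return principal / n  # interest-free loan: split the principal evenly
    growth = (1 + r) ** n
    return principal * r * growth / (growth - 1)


payment = monthly_payment(100_000, 0.06, 30)
print(f"Monthly payment: {payment:.2f}")
print(f"Total interest: {payment * 360 - 100_000:.2f}")
```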

22. Command-Line Tools

Develop command-line tools for tasks like file manipulation, data processing, or system monitoring. Highlight your skills in creating efficient and user-friendly command-line applications.

23. IoT-Based Health Monitoring System

Create an IoT-based health monitoring system that collects and analyzes health-related data. Showcase your ability to work on projects with social impact.

24. Facial Recognition System

Build a facial recognition system using Python and computer vision libraries. Showcase your skills in biometric technology.

25. Social Media Dashboard

Develop a social media dashboard that aggregates and displays data from various platforms. Highlight your skills in data visualization and integration.

Conclusion: As you embark on your job search in 2024, remember that a well-rounded portfolio is key to showcasing your skills and standing out from the crowd. These 25 Python projects cover a diverse range of domains, allowing you to tailor your portfolio to match your interests and the specific requirements of your dream job.

If you want to know more, click here: https://analyticsjobs.in/question/what-are-the-best-python-projects-to-land-a-great-job-in-2024/

#python projects#top python projects#best python projects#analytics jobs#python#coding#programming#machine learning

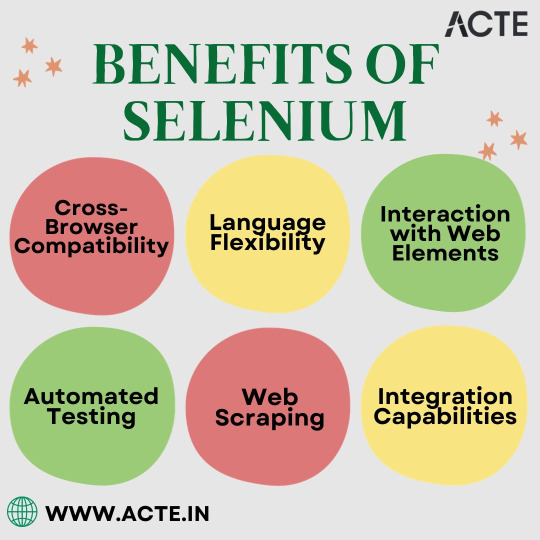

Why Choose Selenium? Exploring the Key Benefits for Testing and Development

In today's digitally driven world, where speed, accuracy, and efficiency are paramount, web automation has emerged as the cornerstone of success for developers, testers, and organizations. At the heart of this automation revolution stands Selenium, an open-source framework that has redefined how we interact with web browsers. In this comprehensive exploration, we embark on a journey to uncover the multitude of advantages that Selenium offers and how it empowers individuals and businesses in the digital age.

The Selenium Advantage: A Closer Look

Selenium, often regarded as the crown jewel of web automation, offers a variety of advantages that play important roles in simplifying complex web tasks and elevating web application development and testing processes. Let's explore the main advantages in more detail:

1. Cross-Browser Compatibility: Bridging Browser Gaps

Selenium's remarkable ability to support various web browsers, including Chrome, Firefox, and Edge, ensures that web applications function consistently across different platforms. This cross-browser compatibility is invaluable in a world where users access websites from a variety of devices and browsers. Whether it's a responsive e-commerce site or a mission-critical enterprise web app, Selenium bridges the browser gaps seamlessly.

2. Language Flexibility: Your Language of Choice

One of Selenium's standout features is its language flexibility. It doesn't impose restrictions on developers, allowing them to harness the power of Selenium using their preferred programming language. Whether you're proficient in Java, Python, C#, Ruby, or another language, Selenium welcomes your expertise with open arms. This language flexibility fosters inclusivity among developers and testers, ensuring that Selenium adapts to your preferred coding language.

3. Interaction with Web Elements: User-like Precision

Selenium empowers you to interact with web elements with pinpoint precision, mimicking human user actions. It can click buttons, fill in forms, navigate dropdown menus, scroll through pages, and simulate a wide range of other user interactions. This level of precision is critical for automating complex web tasks, because the actions Selenium performs closely mirror those a human user would take in the browser.

4. Automated Testing: Quality Assurance Simplified

Quality assurance professionals and testers rely heavily on Selenium for automated testing of web applications. By identifying issues, regressions, and functional problems early in the development process, Selenium streamlines the testing phase, reducing both the time and effort required for comprehensive testing. Automated testing with Selenium not only enhances the efficiency of the testing process but also improves the overall quality of web applications.

5. Web Scraping: Unleashing Data Insights

In an era where data reigns supreme, Selenium emerges as an effective tool for web scraping tasks. Because it drives a real browser, it can extract data from websites, including pages that render their content with JavaScript, and store it for analysis, reporting, or integration into other applications. This capability is particularly valuable for businesses and organizations seeking to leverage web data for informed decision-making. Whether it's gathering pricing data for competitive analysis or extracting news articles for sentiment analysis, Selenium's web scraping capabilities are invaluable.
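Selenium only drives the browser. Once a page has rendered, pulling fields out of it is ordinary HTML parsing. The sketch below shows that extraction step with Python's standard-library `html.parser`, under two assumptions. First, the HTML is already in hand; in a real Selenium session it would typically come from `driver.page_source`. Second, the markup is hypothetical: each product has child elements with the classes `name` and `price`.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects name/price records from elements whose class list contains 'name' or 'price'.

    The class names are hypothetical; real sites use their own markup.
    """

    def __init__(self):
        super().__init__()
        self.products = []   # completed {"name": ..., "price": ...} records
        self._field = None   # field whose text we expect next, if any
        self._current = {}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for field in ("name", "price"):
            if field in classes:
                self._field = field

    def handle_data(self, data):
        if self._field and data.strip():
            self._current[self._field] = data.strip()
            self._field = None
            # A record is complete once both fields have been seen.
            if "name" in self._current and "price" in self._current:
                self.products.append(self._current)
                self._current = {}


def extract_products(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.products


# Stand-in for driver.page_source after Selenium has rendered the page.
sample = """
<div class="product"><span class="name">Cordless Drill</span>
<span class="price">$89.00</span></div>
<div class="product"><span class="name">Hammer</span>
<span class="price">$15.50</span></div>
"""
print(extract_products(sample))
```

Keeping parsing separate from browser control means the extraction logic can be tested against saved HTML without launching a browser.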

6. Integration Capabilities: The Glue in Your Tech Stack

Selenium integrates with a wide range of testing frameworks, continuous integration (CI) tools, and other technologies, making it the glue that binds your tech stack together. This integration facilitates the orchestration of automated tests, ensuring that they fit seamlessly into your development workflow. Selenium's compatibility with popular CI/CD (Continuous Integration/Continuous Deployment) platforms like Jenkins, Travis CI, and CircleCI streamlines the testing and validation processes, making Selenium an indispensable component of the software development lifecycle.

In conclusion, the advantages of using Selenium in web automation are substantial, and they significantly contribute to efficient web development, testing, and data extraction processes. Whether you're a seasoned developer looking to streamline your web applications or a cautious tester aiming to enhance the quality of your products, Selenium stands as a versatile tool that can cater to your diverse needs.

At ACTE Technologies, we understand the key role that Selenium plays in the ever-evolving tech landscape. We offer comprehensive training programs designed to empower individuals and organizations with the knowledge and skills needed to harness the power of Selenium effectively. Our commitment to continuous learning and professional growth ensures that you stay at the forefront of technology trends, equipping you with the tools needed to excel in a rapidly evolving digital world.

So, whether you're looking to upskill, advance your career, or simply stay competitive in the tech industry, ACTE Technologies is your trusted partner on this transformative journey. Join us and unlock a world of possibilities in the dynamic realm of technology. Your success story begins here, where Selenium's advantages meet your aspirations for excellence and innovation.

7 Ways to Use Web Scraping for E-Commerce Growth

In the fast-paced world of e-commerce, businesses are constantly seeking innovative strategies to gain a competitive edge. One such strategy that has gained significant traction in recent years is web scraping. Web scraping involves extracting data from websites to gather valuable insights and leverage them for business growth. For e-commerce businesses, web scraping offers a multitude of opportunities to optimize operations, enhance customer experiences, and drive sales. Here are seven ways in which e-commerce businesses can harness the power of web scraping for sustainable growth:

Price Monitoring and Competitive Analysis: One of the most common applications of web scraping in e-commerce is price monitoring and competitive analysis. By scraping product prices from competitors' websites, businesses can gain real-time insights into market trends and adjust their pricing strategies accordingly. This allows them to stay competitive and maximize profit margins without manually monitoring prices across multiple platforms.

Product Catalog Enrichment: Maintaining a comprehensive and up-to-date product catalog is essential for e-commerce success. Web scraping can automate the process of gathering product information, including descriptions, images, specifications, and customer reviews, from various sources such as manufacturer websites, online marketplaces, and social media platforms. This enriched product catalog not only enhances the browsing experience for customers but also facilitates better search engine optimization (SEO) by ensuring that product pages are filled with relevant content.

Inventory Management: Effective inventory management is crucial for preventing stockouts and minimizing excess inventory costs. Web scraping can help e-commerce businesses keep track of product availability and stock levels across multiple suppliers' websites in real-time. By automating inventory updates, businesses can optimize their procurement processes, streamline order fulfillment, and minimize the risk of overselling or understocking.

Market Research and Trend Analysis: Understanding market trends and consumer preferences is vital for making informed business decisions. Web scraping enables e-commerce businesses to collect vast amounts of data from online forums, social media platforms, and review websites to identify emerging trends, monitor customer sentiment, and gauge competitors' strategies. This valuable market intelligence can inform product development, marketing campaigns, and merchandising efforts, ensuring that businesses stay ahead of the curve.

Dynamic Pricing and Personalized Promotions: Web scraping empowers e-commerce businesses to implement dynamic pricing strategies and personalized promotions based on real-time market data and customer behavior. By monitoring competitors' pricing changes, demand fluctuations, and customer segmentation, businesses can dynamically adjust prices and offer targeted discounts or incentives to maximize sales and customer satisfaction.

Lead Generation and Customer Acquisition: Web scraping can be utilized to generate leads and identify potential customers by extracting contact information from relevant websites, forums, and social media platforms. By building targeted prospect lists, businesses can launch personalized email campaigns, social media advertisements, and retargeting initiatives to engage with potential customers and drive traffic to their e-commerce platforms.

Fraud Detection and Risk Management: In an increasingly digital marketplace, e-commerce businesses face the constant threat of fraudulent activities such as fake reviews, price gouging, and account takeovers. Web scraping can help businesses detect and prevent fraud by monitoring online channels for suspicious activities, identifying counterfeit products, and analyzing patterns of fraudulent behavior. By implementing robust fraud detection mechanisms, businesses can safeguard their reputation, protect their customers, and minimize financial losses.
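One simple signal for fake reviews is identical or near-identical text posted under different accounts. The sketch below assumes the scraped reviews arrive as hypothetical `(account, text)` pairs. It normalizes each text and flags any review body that appears under more than one account. Real fraud detection combines many signals; this shows only one of them.

```python
import re
from collections import defaultdict


def normalize(text):
    """Lowercase and collapse punctuation/whitespace so trivial edits still match."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def flag_duplicate_reviews(reviews, min_accounts=2):
    """Return {normalized_text: sorted accounts} for texts posted by >= min_accounts accounts.

    reviews: hypothetical (account, text) pairs produced by a review scraper.
    """
    accounts_by_text = defaultdict(set)
    for account, text in reviews:
        accounts_by_text[normalize(text)].add(account)
    return {
        text: sorted(accounts)
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


reviews = [
    ("alice", "Great product!! Works perfectly."),
    ("bot_17", "great product - works perfectly"),
    ("bob", "Arrived late, but the quality is fine."),
]
print(flag_duplicate_reviews(reviews))
```

Normalizing before grouping catches copies that differ only in punctuation or capitalization. Catching paraphrased copies would need fuzzier similarity measures.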

In conclusion, web scraping presents e-commerce businesses with a wealth of opportunities to optimize operations, enhance customer experiences, and drive sustainable growth. By leveraging web scraping techniques for price monitoring, product catalog enrichment, inventory management, market research, dynamic pricing, lead generation, and fraud detection, businesses can gain a competitive edge in today's dynamic marketplace. However, it's essential for businesses to approach web scraping ethically and responsibly, respecting the terms of service and privacy policies of the websites they scrape and ensuring compliance with relevant data protection regulations. With careful planning and implementation, web scraping can be a powerful tool for e-commerce growth in the digital age.

Impact of AI on Web Scraping Practices

Introduction

Web scraping has evolved steadily toward greater efficiency, and advances in artificial intelligence (AI) have accelerated that shift in recent years. As more enterprises and researchers rely on data extraction to derive insights and make decisions, AI-enabled methods have turned many traditional techniques into approaches that are more efficient, more scalable, and more resilient against anti-scraping measures.

This blog discusses the effects of AI on web scraping, how AI-powered automation is changing the web scraping industry, the challenges being faced, and, ultimately, the road ahead for web scraping with AI.

How AI is Transforming Web Scraping

1. Enhanced Data Extraction Efficiency

Traditional scraping relies on rule-based extraction: someone writes a script hard-coded for one particular site and one set of extraction rules, and it breaks whenever the site's structure changes. AI-based scraping aims to avoid this. The scraper adapts automatically when a website's structure changes, so data extraction continues without constant rewrites of the script.
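Full AI-based adaptation is beyond a short example. The underlying goal, extraction that survives markup changes, can still be illustrated without machine learning. The rule-based sketch below is not an AI model. It tries several hypothetical extraction patterns in order, then falls back to a layout-agnostic search for a dollar amount anywhere in the page.

```python
import re

# Hypothetical patterns for an old and a redesigned layout, most specific first.
PRICE_PATTERNS = [
    re.compile(r'<span class="price">\s*([^<]+?)\s*</span>'),
    re.compile(r'data-price="([^"]+)"'),
]
# Layout-agnostic fallback: the first dollar amount anywhere in the HTML.
FALLBACK = re.compile(r"\$\s?\d[\d,]*(?:\.\d{2})?")


def extract_price(html):
    """Return the first price found by the specific patterns, else the fallback, else None."""
    for pattern in PRICE_PATTERNS:
        match = pattern.search(html)
        if match:
            return match.group(1)
    match = FALLBACK.search(html)
    return match.group(0) if match else None


old_layout = '<span class="price"> $19.99 </span>'
new_layout = '<div data-price="$21.49">Buy</div>'
unknown_layout = "<p>Now only $18.75 while stocks last</p>"
for page in (old_layout, new_layout, unknown_layout):
    print(extract_price(page))
```

The fallback trades precision for resilience: it keeps producing a value after a redesign, but that value may be the wrong number on a page that lists several prices.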

2. AI-Powered Web Crawlers

Machine learning algorithms enable web crawlers to mimic human browsing behavior, reducing the risk of detection. These AI-driven crawlers can:

Identify patterns in website layouts.

Adapt to dynamic content.

Handle complex JavaScript-rendered pages with ease.

3. Natural Language Processing (NLP) for Data Structuring

NLP helps in:

Extracting meaningful insights from unstructured text.

Categorizing and classifying data based on context.

Understanding sentiment and contextual relevance in customer reviews and news articles.

4. Automated CAPTCHA Solving

Many websites use CAPTCHAs to block bots. AI models, especially deep learning-based Optical Character Recognition (OCR) techniques, help bypass these challenges by simulating human-like responses.

5. AI in Anti-Detection Mechanisms

AI-powered web scraping integrates:

User-agent rotation to simulate diverse browsing behaviors.

IP Rotation & Proxies to prevent blocking.

Headless Browsers & Human-Like Interaction for bypassing bot detection.

Applications of AI in Web Scraping

1. E-Commerce Price Monitoring

AI scrapers help businesses track competitors' pricing, stock availability, and discounts in real-time, enabling dynamic pricing strategies.
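A dynamic pricing strategy ultimately reduces to a repricing rule fed by scraped competitor prices. The sketch below shows one common style of rule, using hypothetical parameters. It undercuts the cheapest competitor by a fixed step, but never goes below a floor or above a ceiling. Real strategies also weigh demand, margins, and brand positioning.

```python
from decimal import Decimal


def reprice(competitor_prices, floor, ceiling, step=Decimal("0.01")):
    """Suggest a price just below the cheapest competitor, clamped to [floor, ceiling].

    competitor_prices: Decimal prices scraped for in-stock competitor offers.
    floor/ceiling: hypothetical business limits (e.g. cost plus a minimum margin).
    """
    if not competitor_prices:
        return ceiling  # No competition observed: fall back to the ceiling.
    target = min(competitor_prices) - step
    return max(floor, min(target, ceiling))


print(reprice([Decimal("24.99"), Decimal("22.50")], floor=Decimal("20.00"), ceiling=Decimal("30.00")))
print(reprice([Decimal("18.00")], floor=Decimal("20.00"), ceiling=Decimal("30.00")))
```

`Decimal` avoids the rounding surprises that binary floats introduce into currency arithmetic.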

2. Financial & Market Intelligence

AI-powered web scraping extracts financial reports, news articles, and stock market data for predictive analytics and trend forecasting.

3. Lead Generation & Business Intelligence

Automating the collection of business contact details, customer feedback, and sales leads through AI-driven scraping solutions.

4. Social Media & Sentiment Analysis

Extracting social media conversations, hashtags, and sentiment trends to analyze brand reputation and customer perception.

5. Healthcare & Pharmaceutical Data Extraction

AI scrapers retrieve medical research, drug prices, and clinical trial data, aiding healthcare professionals in decision-making.

Challenges in AI-Based Web Scraping

1. Advanced Anti-Scraping Technologies

Websites employ sophisticated detection methods, including fingerprinting and behavioral analysis.

AI mitigates these by mimicking real user interactions.

2. Data Privacy & Legal Considerations

Compliance with data regulations like GDPR and CCPA is essential.

Ethical web scraping practices ensure responsible data usage.
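Legal compliance depends on jurisdiction and on what data is collected, and no code settles it. One routine technical courtesy, though, is honoring a site's robots.txt. The sketch below uses Python's standard-library `urllib.robotparser`. The rules, URLs, and the `my-price-bot` user agent are hypothetical. The rules are parsed from a string so the example runs offline; in practice you would call `set_url(...)` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example shop.
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "my-price-bot" is a hypothetical user-agent name for our scraper.
for url in ("https://shop.example.com/products/123",
            "https://shop.example.com/checkout/cart"):
    print(url, parser.can_fetch("my-price-bot", url))

# Seconds to wait between requests, if the site declares one.
print(parser.crawl_delay("my-price-bot"))
```

Checking `can_fetch` before each request, and sleeping for the declared crawl delay, keeps a scraper within the limits the site has published.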

3. High Computational Costs

AI-based web scrapers can require GPU-intensive resources, leading to higher operational costs.

Optimization techniques, such as cloud-based scraping, help reduce costs.

Future Trends in AI for Web Scraping

1. AI-Driven Adaptive Scrapers

Scrapers that self-learn and adjust to new website structures without human intervention.

2. Integration with Machine Learning Pipelines

Combining AI scrapers with data analytics tools for real-time insights.

3. AI-Powered Data Anonymization

Protecting user privacy by automating data masking and filtering.
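Automated masking can start much simpler than AI. The sketch below is regex-based, not an AI model. It redacts email addresses and phone-number-like runs of digits from scraped text before storage. The patterns are deliberately simple assumptions: they will miss some formats and may also catch non-phone numbers such as dates.

```python
import re

# Simplified patterns (assumptions), not a complete PII detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Optional '+', a digit, 5+ digits/spaces/dots/dashes/parentheses, then a digit.
PHONE = re.compile(r"\+?\d[\d\s().-]{5,}\d")


def mask_pii(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


review = "Contact me at jane.doe@example.com or +1 (555) 123-4567 about the order."
print(mask_pii(review))
```

Emails are masked first, so digits inside an address are not mistaken for a phone number.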

4. Blockchain-Based Data Validation

Ensuring authenticity and reliability of extracted data using blockchain verification.
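A full blockchain is not needed to show the core idea, which is tamper-evidence through chained hashes. The sketch below is a simplified hash chain built with Python's `hashlib`, not a distributed ledger, and the record fields are hypothetical. Each scraped record stores the SHA-256 of its content plus the previous record's hash, so altering any earlier record breaks verification.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(payload, prev_hash):
    # Canonical JSON (sorted keys) so the same data always hashes identically.
    body = json.dumps(payload, sort_keys=True) + prev_hash
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def build_chain(payloads):
    chain, prev = [], GENESIS
    for payload in payloads:
        h = record_hash(payload, prev)
        chain.append({"payload": payload, "prev": prev, "hash": h})
        prev = h
    return chain


def verify_chain(chain):
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or record_hash(block["payload"], prev) != block["hash"]:
            return False
        prev = block["hash"]
    return True


# Hypothetical scraped records.
chain = build_chain([{"sku": "A1", "price": 19.99}, {"sku": "B2", "price": 5.25}])
print(verify_chain(chain))          # True
chain[0]["payload"]["price"] = 9.99  # tamper with an earlier record
print(verify_chain(chain))          # False
```

A hash chain detects tampering but does not prevent it. Someone who can rewrite every record can also recompute every hash, which is the gap that distributed consensus is meant to close.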

Conclusion

Adding AI to web scraping has made data extraction smarter, more flexible, and more scalable. AI helps organizations cope with anti-bot mechanisms, dynamic changes in websites, and unstructured data processing. As the technology matures, AI-driven web scraping is likely to become more capable still and to drive further innovation across industries.

For organizations ready to embrace AI-powered data extraction, CrawlXpert offers state-of-the-art solutions designed for present-day web scraping tasks. Get started with CrawlXpert today to benefit from high-quality, AI-enabled automated web scraping!

Know More : https://www.crawlxpert.com/blog/ai-on-web-scraping-practices

HomeDepot.com Product Data Extraction

Unlocking Insights for E-commerce & Market Analysis

In today’s competitive retail landscape, businesses need accurate and updated product data to stay ahead. Whether you’re an e-commerce store, price comparison website, market researcher, or manufacturer, extracting product data from HomeDepot.com can provide valuable insights for strategic decision-making. DataScrapingServices.com specializes in HomeDepot.com Product Data Extraction, enabling businesses to access real-time product information for price monitoring, competitor analysis, and inventory optimization.

Why Extract Product Data from HomeDepot.com?

HomeDepot.com is a leading online marketplace for home improvement products, appliances, furniture, and tools. Manually tracking and updating product information is inefficient and prone to errors. Automated data extraction ensures you have structured, real-time data to make informed business decisions.

Key Data Fields Extracted from HomeDepot.com

By scraping HomeDepot.com, we extract a wide range of valuable product details, including:

- Product Name

- Product Category

- Brand Name

- SKU (Stock Keeping Unit)

- Product Description

- Price & Discounts

- Stock Availability

- Customer Ratings & Reviews

- Product Images & URLs

- Shipping & Delivery Details
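The fields above map naturally onto a typed record. The sketch below expresses such a schema as a Python dataclass. The field names, types, `parse_price` helper, and sample values are assumptions for illustration, not DataScrapingServices.com's actual output format.

```python
from dataclasses import asdict, dataclass, field
from decimal import Decimal
from typing import List, Optional


@dataclass
class ProductRecord:
    """One scraped product listing (hypothetical schema mirroring the fields listed above)."""
    name: str
    category: str
    brand: str
    sku: str
    description: str
    price: Decimal
    discount_price: Optional[Decimal]
    in_stock: bool
    rating: Optional[float]
    review_count: int
    image_urls: List[str] = field(default_factory=list)
    product_url: str = ""
    shipping_info: str = ""

    @staticmethod
    def parse_price(raw: str) -> Decimal:
        """Turn a display string such as '$1,299.00' into Decimal('1299.00')."""
        return Decimal(raw.replace("$", "").replace(",", "").strip())


# Illustrative values only.
record = ProductRecord(
    name="Example Cordless Drill", category="Power Tools", brand="ExampleBrand",
    sku="0000000000", description="Compact drill/driver kit",
    price=ProductRecord.parse_price("$129.00"), discount_price=None,
    in_stock=True, rating=4.6, review_count=812,
)
print(asdict(record)["price"])
```

A typed record makes missing or malformed fields visible at ingestion time. Converting it with `asdict` yields a plain dictionary for CSV or JSON export.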

Having access to this structured data allows businesses to analyze trends, optimize pricing strategies, and enhance their product offerings.

Benefits of HomeDepot.com Product Data Extraction

1. Competitor Price Monitoring

Tracking real-time product prices from HomeDepot.com helps businesses adjust their pricing strategies to stay competitive. Retailers can compare prices, identify discount trends, and optimize their pricing models to attract more customers.

2. E-commerce Product Enrichment

For e-commerce platforms, high-quality product data is essential for creating detailed and engaging product listings. Extracting accurate descriptions, specifications, and images improves customer experience and conversion rates.

3. Market & Trend Analysis

Businesses can analyze product availability, customer ratings, and sales trends to understand consumer preferences. This data helps retailers and manufacturers identify best-selling products, track demand fluctuations, and make informed inventory decisions.

4. Stock Availability & Inventory Management

Monitoring stock levels on HomeDepot.com helps businesses avoid supply chain disruptions. Retailers can use this data to anticipate demand, manage stock efficiently, and prevent product shortages or overstocking.

5. Enhanced Digital Marketing Strategies

With updated product data, businesses can create effective digital marketing campaigns by targeting the right audience with the right products. Dynamic pricing models and real-time product promotions become easier with automated data extraction.

6. Data-Driven Decision Making

From benchmarking product prices to analyzing customer reviews and ratings, extracted data helps businesses optimize their product strategies and improve customer satisfaction.

Best eCommerce Data Scraping Services Provider

G2 Business Directory Scraping

Kogan Product Details Extraction

Nordstrom Price Scraping Services

Target.com Price Data Extraction

Capterra Reviews Data Extraction

PriceGrabber Product Information Extraction

Lowe's Product Pricing Scraping

Wayfair Product Details Extraction

Homedepot Product Pricing Data Scraping

Product Reviews Data Extraction

Conclusion

Extracting product data from HomeDepot.com is a game-changer for e-commerce businesses, market researchers, and retailers looking to stay competitive, optimize pricing, and improve inventory management. At DataScrapingServices.com, we provide reliable, scalable, and customized data extraction solutions to help businesses make data-driven decisions.

For HomeDepot.com Product Data Extraction Services, email or visit DataScrapingServices.com today!

Top 7 Use Cases of Web Scraping in E-commerce

In the fast-paced world of online retail, data is more than just numbers; it's a powerful asset that fuels smarter decisions and competitive growth. With thousands of products, fluctuating prices, evolving customer behaviors, and intense competition, having access to real-time, accurate data is essential. This is where internet scraping comes in.

Internet scraping (also known as web scraping) is the process of automatically extracting data from websites. In the e-commerce industry, it enables businesses to collect actionable insights to optimize product listings, monitor prices, analyze trends, and much more.

In this blog, we’ll explore the top 7 use cases of internet scraping, detailing how each works, their benefits, and why more companies are investing in scraping solutions for growth and competitive advantage.

What is Internet Scraping?

Internet scraping is the process of using bots or scripts to collect data from web pages. This includes prices, product descriptions, reviews, inventory status, and other structured or unstructured data from various websites. Scraping can be used once or scheduled periodically to ensure continuous monitoring. It’s important to adhere to data guidelines, terms of service, and ethical practices. Tools and platforms like TagX ensure compliance and efficiency while delivering high-quality data.

In e-commerce, this practice becomes essential for businesses aiming to stay agile in a saturated and highly competitive market. Instead of manually gathering data, which is time-consuming and prone to errors, internet scraping automates this process and provides consistent insights at scale.

Before diving into the specific use cases, it's important to understand why so many successful e-commerce companies rely on internet scraping. From competitive pricing to customer satisfaction, scraping empowers businesses to make informed decisions quickly and stay one step ahead in the fast-paced digital landscape.

Below are the top 7 Use cases of internet scraping.

1. Price Monitoring

Online retailers scrape competitor sites to monitor prices in real-time, enabling dynamic pricing strategies and maintaining competitiveness. This allows brands to react quickly to price changes.

How It Works

A scraper is programmed to extract pricing details for identical or similar SKUs across competitor sites. The data is compared to your product catalog, and dashboards or alerts are generated to notify you of changes. The scraper checks prices at intervals such as hourly, daily, or weekly, depending on the market's volatility. This ensures businesses remain up-to-date with any price fluctuations that could impact their sales or profit margins.
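The compare-and-alert step can be sketched in a few lines. The sketch makes two hypothetical assumptions. Scraped competitor prices have already been matched to your SKUs, and an alert should fire when a competitor is cheaper than you by more than a chosen percentage. The SKUs and prices are illustrative.

```python
from decimal import Decimal


def price_alerts(own_prices, competitor_prices, threshold_pct=Decimal("5")):
    """Return (sku, own, competitor_min, gap_pct) where a competitor undercuts us by > threshold_pct.

    own_prices: {sku: Decimal} from your catalog (prices assumed > 0).
    competitor_prices: {sku: [Decimal, ...]} scraped from competitor sites.
    """
    alerts = []
    for sku, own in sorted(own_prices.items()):
        offers = competitor_prices.get(sku)
        if not offers:
            continue  # SKU not matched on any competitor site.
        cheapest = min(offers)
        gap_pct = (own - cheapest) / own * 100
        if gap_pct > threshold_pct:
            alerts.append((sku, own, cheapest, gap_pct.quantize(Decimal("0.1"))))
    return alerts


# Hypothetical catalog and scraped prices.
own = {"DRILL-20V": Decimal("129.00"), "HAMMER-16OZ": Decimal("15.50")}
scraped = {"DRILL-20V": [Decimal("119.00"), Decimal("134.99")], "HAMMER-16OZ": [Decimal("15.25")]}
print(price_alerts(own, scraped))
```

Running this on each scheduled scrape, hourly, daily, or weekly, and forwarding non-empty results to a dashboard or notification channel gives the alerting loop described above.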

Benefits of Price Monitoring

Competitive edge in pricing

Avoids underpricing or overpricing

Enhances profit margins while remaining attractive to customers

Helps with automatic repricing tools

Allows better seasonal pricing strategies

2. Product Catalog Optimization

Scraping competitor and marketplace listings helps optimize your product catalog by identifying missing information, keyword trends, or layout strategies that convert better.

How It Works

Scrapers collect product titles, images, descriptions, tags, and feature lists. The data is analyzed to identify gaps and opportunities in your listings. AI-driven catalog optimization tools use this scraped data to recommend ideal product titles, meta tags, and visual placements. Combining this with A/B testing can significantly improve your conversion rates.
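Gap analysis can be as simple as checking each of your listings against the attributes competitors commonly fill in. In the sketch below, the listing dictionaries, field names, and the 50% "commonly used" cutoff are all hypothetical.

```python
from collections import Counter


def common_fields(competitor_listings, min_share=0.5):
    """Fields that are non-empty in at least min_share of scraped competitor listings."""
    if not competitor_listings:
        return set()
    counts = Counter(
        key for listing in competitor_listings for key, value in listing.items() if value
    )
    total = len(competitor_listings)
    return {key for key, n in counts.items() if n / total >= min_share}


def listing_gaps(own_listings, competitor_listings, min_share=0.5):
    """Map each of our SKUs to the commonly used fields its listing is missing or leaves empty."""
    expected = common_fields(competitor_listings, min_share)
    gaps = {}
    for listing in own_listings:
        missing = sorted(f for f in expected if not listing.get(f))
        if missing:
            gaps[listing["sku"]] = missing
    return gaps


# Hypothetical scraped competitor listings and our own catalog entry.
competitors = [
    {"title": "Drill", "bullets": ["20V"], "video": "v.mp4", "specs": {"volts": 20}},
    {"title": "Drill kit", "bullets": ["2 batteries"], "video": "", "specs": {"volts": 20}},
]
ours = [{"sku": "D1", "title": "Drill", "bullets": [], "specs": {"volts": 20}}]
print(listing_gaps(ours, competitors))
```

The output is a to-do list per SKU. Deciding which gaps actually affect conversions is where the A/B testing mentioned above comes in.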

Benefits

Better product visibility

Enhanced user experience and conversion rates

Identifies underperforming listings

Helps curate high-performing metadata templates

3. Competitor Analysis

Internet scraping provides detailed insights into your competitors’ strategies, such as pricing, promotions, product launches, and customer feedback, helping to shape your business approach.

How It Works

Scraped data from competitor websites and social platforms is organized and visualized for comparison. It includes pricing, stock levels, and promotional tactics. You can monitor their advertising frequency, ad types, pricing structure, customer engagement strategies, and feedback patterns. This creates a 360-degree understanding of what works in your industry.

Benefits

Uncover competitive trends

Benchmark product performance

Inform marketing and product strategy

Identify gaps in your offerings

Respond quickly to new product launches

4. Customer Sentiment Analysis

By scraping reviews and ratings from marketplaces and product pages, businesses can evaluate customer sentiment, discover pain points, and improve service quality.

How It Works

Natural language processing (NLP) is applied to scraped review content. Positive, negative, and neutral sentiments are categorized, and common themes are highlighted. Text analysis on these reviews helps detect not just satisfaction levels but also recurring quality issues or logistics complaints. This can guide product improvements and operational refinements.
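Production systems use trained NLP models for this step. The categorize-and-count idea can still be shown with a deliberately tiny lexicon-based classifier. In the sketch below, the word lists and sample reviews are hypothetical stand-ins for a real sentiment model. The classifier will misread negation, sarcasm, and domain-specific terms.

```python
import re
from collections import Counter

# Tiny hypothetical lexicons; a real system would use a trained model.
POSITIVE = {"great", "love", "sturdy", "fast", "excellent"}
NEGATIVE = {"broken", "late", "cheap", "refund", "damaged"}


def tokens(text):
    return re.findall(r"[a-z']+", text.lower())


def classify(review):
    """Label a review by counting positive minus negative lexicon hits."""
    words = tokens(review)
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


def summarize(reviews):
    """Count sentiment labels and the most frequent negative terms (recurring complaints)."""
    labels = Counter(classify(r) for r in reviews)
    complaints = Counter(w for r in reviews for w in tokens(r) if w in NEGATIVE)
    return labels, complaints.most_common(3)


reviews = [
    "Great drill, sturdy and fast.",
    "Arrived late and the box was damaged.",
    "Late delivery again.",
    "It is a drill.",
]
print(summarize(reviews))
```

The complaint counts illustrate how recurring logistics issues, here late delivery, can surface from review text even before any model is trained.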

Benefits

Improve product and customer experience

Monitor brand reputation

Address negative feedback proactively

Build trust and transparency

Adapt to changing customer preferences

5. Inventory and Availability Tracking

Track your competitors' stock levels and restocking schedules to predict demand and plan your inventory efficiently.

How It Works

Scrapers monitor product availability indicators (like "In Stock", "Out of Stock") and gather timestamps to track restocking frequency. This enables brands to respond quickly to opportunities when competitors go out of stock. It also supports real-time alerts for critical stock thresholds.
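Turning periodic availability snapshots into restock events is a small state-tracking problem. The sketch below assumes hypothetical `(timestamp, status_text)` observations for one competitor product, where the status text is the scraped label. Any label other than "In Stock" counts as unavailable, so labels like "Only 3 left" would need extra handling.

```python
from datetime import datetime, timedelta


def restock_events(observations):
    """Return (went_out, came_back, downtime) for each completed out-of-stock spell.

    observations: hypothetical (datetime, status_text) tuples, in any order.
    """
    events, out_since = [], None
    for ts, status in sorted(observations):
        in_stock = status.strip().lower() == "in stock"
        if not in_stock and out_since is None:
            out_since = ts  # stock just ran out
        elif in_stock and out_since is not None:
            events.append((out_since, ts, ts - out_since))  # restocked
            out_since = None
    return events


t0 = datetime(2024, 5, 1, 9, 0)
obs = [
    (t0, "In Stock"),
    (t0 + timedelta(hours=6), "Out of Stock"),
    (t0 + timedelta(hours=30), "In Stock"),
    (t0 + timedelta(hours=54), "Out of Stock"),
]
for went_out, came_back, downtime in restock_events(obs):
    print(went_out, "->", came_back, "down for", downtime)
```

The downtimes between restocks give a rough restocking frequency, and a still-open spell at the end of the data marks a current competitor shortage. Precision is limited by how often the scraper takes snapshots.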

Benefits

Avoid overstocking or stockouts

Align promotions with competitor shortages

Streamline supply chain decisions

Improve vendor negotiation strategies

Forecast demand more accurately

6. Market Trend Identification

Scraping data from marketplaces and social commerce platforms helps identify trending products, search terms, and buyer behaviors.

How It Works

Scraped data from platforms like Amazon, eBay, or Etsy is analyzed for keyword frequency, popularity scores, and rising product categories. Trends can also be extracted from user-generated content and influencer reviews, giving your brand insights before a product goes mainstream.
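"Rising" usually means growing faster than before. One simple approach therefore compares term frequencies in scraped titles or search terms across two periods. In the sketch below, the sample titles, the minimum count, and the add-one smoothing are hypothetical choices, not a standard trend metric.

```python
import re
from collections import Counter


def term_counts(titles):
    return Counter(w for t in titles for w in re.findall(r"[a-z0-9]+", t.lower()))


def rising_terms(previous, current, min_count=2, top=5):
    """Rank terms by growth ratio (current + 1) / (previous + 1) between two periods."""
    before, now = term_counts(previous), term_counts(current)
    growth = {
        term: (count + 1) / (before[term] + 1)
        for term, count in now.items()
        if count >= min_count  # ignore terms too rare to be meaningful
    }
    return sorted(growth.items(), key=lambda kv: kv[1], reverse=True)[:top]


# Hypothetical scraped product titles from two periods.
last_month = ["LED desk lamp", "cordless drill", "desk lamp with usb"]
this_month = ["smart plug 2 pack", "smart plug wifi", "smart bulb", "LED desk lamp"]
print(rising_terms(last_month, this_month))
```

The add-one smoothing keeps brand-new terms from producing a division by zero. The minimum count filters out one-off noise.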

Benefits

Stay ahead of consumer demand

Launch timely product lines

Align campaigns with seasonal or viral trends

Prevent dead inventory

Invest confidently in new product development

7. Lead Generation and Business Intelligence

Gather contact details, seller profiles, or niche market data from directories and B2B marketplaces to fuel outreach campaigns and business development.

How It Works

Scrapers extract publicly available email addresses, company names, product listings, and seller ratings. The data is filtered based on industry and size. Lead qualification becomes faster when you pre-analyze industry relevance, product categories, or market presence through scraped metadata.

Benefits

Expand B2B networks

Targeted marketing efforts

Increase qualified leads and partnerships

Boost outreach accuracy

Customize proposals based on scraped insights

How Does Internet Scraping Work in E-commerce?

Target Identification: Identify the websites and data types you want to scrape, such as pricing, product details, or reviews.

Bot Development: Create or configure a scraper bot in Python with libraries such as BeautifulSoup or Scrapy, or use advanced scraping platforms like TagX.

Data Extraction: Bots navigate web pages, extract required data fields, and store them in structured formats (CSV, JSON, etc.).

Data Cleaning: Filter, de-duplicate, and normalize scraped data for analysis.

Data Analysis: Feed clean data into dashboards, CRMs, or analytics platforms for decision-making.

Automation and Scheduling: Set scraping frequency based on how dynamic the target sites are.

Integration: Sync data with internal tools like ERP, inventory systems, or marketing automation platforms.
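The cleaning and storage steps can be sketched with the standard library alone. The sketch assumes the extraction step has already produced raw dictionaries; the field names and sample rows are hypothetical. It normalizes price strings and whitespace, drops duplicates by URL, and serializes the result as both JSON and CSV.

```python
import csv
import io
import json
from decimal import Decimal, InvalidOperation


def clean(rows):
    """Normalize fields, skip rows with unparseable prices, and de-duplicate by URL."""
    seen, cleaned = set(), []
    for row in rows:
        url = row.get("url", "").strip().rstrip("/")
        try:
            price = Decimal(row.get("price", "").replace("$", "").replace(",", "").strip())
        except InvalidOperation:
            continue  # e.g. "Call for price"; a real pipeline might log these instead
        if not url or url in seen:
            continue
        seen.add(url)
        title = " ".join(row.get("title", "").split())  # collapse stray whitespace
        cleaned.append({"url": url, "title": title, "price": str(price)})
    return cleaned


def to_csv(rows):
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["url", "title", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical raw scraper output, including a duplicate and an unparseable price.
raw = [
    {"url": "https://shop.example.com/p/1/", "title": "  Table   Saw ", "price": "$1,099.00"},
    {"url": "https://shop.example.com/p/1", "title": "Table Saw", "price": "$1,099.00"},
    {"url": "https://shop.example.com/p/2", "title": "Hammer", "price": "Call for price"},
]
rows = clean(raw)
print(json.dumps(rows, indent=2))
print(to_csv(rows))
```

Prices are stored as strings of a `Decimal` so that JSON output keeps exact values. Downstream tools can parse them back without float rounding.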

Key Benefits of Internet Scraping for E-commerce

Scalable Insights: Access large volumes of data from multiple sources in real time

Improved Decision Making: Real-time data fuels smarter, faster decisions

Cost Efficiency: Reduces the need for manual research and data entry

Strategic Advantage: Gives brands an edge over slower-moving competitors

Enhanced Customer Experience: Drives better content, service, and personalization

Automation: Reduces human effort and speeds up analysis

Personalization: Tailor offers and messaging based on real-world competitor and customer data

Why Businesses Trust TagX for Internet Scraping

TagX offers enterprise-grade, customizable internet scraping solutions specifically designed for e-commerce businesses. With compliance-first approaches and powerful automation, TagX transforms raw online data into refined insights. Whether you're monitoring competitors, optimizing product pages, or discovering market trends, TagX helps you stay agile and informed.

Their team of data engineers and domain experts ensures that each scraping task is accurate, efficient, and aligned with your business goals. Plus, their built-in analytics dashboards reduce the time from data collection to actionable decision-making.

Final Thoughts

E-commerce success today is tied directly to how well you understand and react to market data. With internet scraping, brands can unlock insights that drive pricing, inventory, customer satisfaction, and competitive advantage. Whether you're a startup or a scaled enterprise, the smart use of scraping technology can set you apart.

Ready to outsmart the competition? Partner with TagX to start scraping smarter.

Beyond the Books: Real-World Coding Projects for Aspiring Developers

Arya College of Engineering & I.T. is one of the best colleges in Jaipur. Transitioning from theoretical learning to hands-on coding is a crucial step in a computer science education. Real-world projects bridge this gap, enabling students to apply classroom concepts, build portfolios, and develop industry-ready skills. Here are impactful project ideas across various domains that every computer science student should consider:

Web Development

Personal Portfolio Website: Design and deploy a website to showcase your skills, projects, and resume. This project teaches HTML, CSS, JavaScript, and optionally frameworks like React or Bootstrap, and helps you understand web hosting and deployment.

E-Commerce Platform: Build a basic online store with product listings, shopping carts, and payment integration. This project introduces backend development, database management, and user authentication.

Mobile App Development

Recipe Finder App: Develop a mobile app that lets users search for recipes based on ingredients they have. This project covers UI/UX design, API integration, and mobile programming languages like Java (Android) or Swift (iOS).

Personal Finance Tracker: Create an app to help users manage expenses, budgets, and savings, integrating features like OCR for receipt scanning.

Data Science and Analytics

Social Media Trends Analysis Tool: Analyze data from platforms like Twitter or Instagram to identify trends and visualize user behavior. This project involves data scraping, natural language processing, and data visualization.

Stock Market Prediction Tool: Use historical stock data and machine learning algorithms to predict future trends, applying regression, classification, and data visualization techniques.

Artificial Intelligence and Machine Learning

Face Detection System: Implement a system that recognizes faces in images or video streams using OpenCV and Python. This project explores computer vision and deep learning.

Spam Filtering: Build a model to classify messages as spam or not using natural language processing and machine learning.

Cybersecurity

Virtual Private Network (VPN): Develop a simple VPN to understand network protocols and encryption. This project enhances your knowledge of cybersecurity fundamentals and system administration.

Intrusion Detection System (IDS): Create a tool to monitor network traffic and detect suspicious activities, requiring network programming and data analysis skills.

Collaborative and Cloud-Based Applications

Real-Time Collaborative Code Editor: Build a web-based editor where multiple users can code together in real time, using technologies like WebSocket, React, Node.js, and MongoDB. This project demonstrates real-time synchronization and operational transformation.

IoT and Automation

Smart Home Automation System: Design a system to control home devices (lights, thermostats, cameras) remotely, integrating hardware, software, and cloud services.

Attendance System with Facial Recognition: Automate attendance tracking using facial recognition and deploy it with hardware like Raspberry Pi.

Other Noteworthy Projects

Chatbots: Develop conversational agents for customer support or entertainment, leveraging natural language processing and AI.

Weather Forecasting App: Create a user-friendly app displaying real-time weather data and forecasts, using APIs and data visualization.

Game Development: Build a simple 2D or 3D game using Unity or Unreal Engine to combine programming with creativity.

Tips for Maximizing Project Impact

Align With Interests: Choose projects that resonate with your career goals or personal passions for sustained motivation.

Emphasize Teamwork: Collaborate with peers to enhance communication and project management skills.

Focus on Real-World Problems: Address genuine challenges to make your projects more relevant and impressive to employers.

Document and Present: Maintain clear documentation and present your work effectively to demonstrate professionalism and technical depth.

Conclusion

Engaging in real-world projects is the cornerstone of a robust computer science education. These experiences not only reinforce theoretical knowledge but also cultivate practical abilities, creativity, and confidence, preparing students for the demands of the tech industry.

Introduction: The Evolution of Web Scraping

Traditional Web Scraping involves deploying scrapers on dedicated servers or local machines, using tools like Python, BeautifulSoup, and Selenium. While effective for small-scale tasks, these methods require constant monitoring, manual scaling, and significant infrastructure management. Developers often need to handle cron jobs, storage, IP rotation, and failover mechanisms themselves. Any sudden spike in demand could result in performance bottlenecks or downtime. As businesses grow, these challenges make traditional scraping harder to maintain. This is where new-age, cloud-based approaches like Serverless Web Scraping emerge as efficient alternatives, helping automate, scale, and streamline data extraction.

Challenges of Manual Scraper Deployment (Scaling, Infrastructure, Cost)

Manual scraper deployment comes with numerous operational challenges. Scaling scrapers to handle large datasets or traffic spikes requires robust infrastructure and resource allocation. Managing servers involves ongoing costs, including hosting, maintenance, load balancing, and monitoring. Additionally, handling failures, retries, and scheduling manually can lead to downtime or missed data. These issues slow down development and increase overhead. In contrast, Serverless Web Scraping removes the need for dedicated servers by running scraping tasks on platforms like AWS Lambda, Azure Functions, and Google Cloud Functions, offering auto-scaling and cost-efficiency on a pay-per-use model.

Introduction to Serverless Web Scraping as a Game-Changer

What is Serverless Web Scraping?

Serverless Web Scraping refers to extracting data from websites using an event-driven cloud architecture, without managing the underlying servers. In cloud computing, "serverless" means the cloud provider automatically handles provisioning, scaling, and resource allocation. This lets developers focus purely on writing the Data Collection logic while the platform takes care of execution.

Popular serverless platforms such as AWS Lambda, Azure Functions, and Google Cloud Functions offer robust environments for deploying these scraping tasks. Developers write small, stateless functions that are triggered by events such as HTTP requests, file uploads, or scheduled intervals (commonly called Scheduled Scraping and Event-Based Triggers). Each function runs in an isolated, short-lived execution environment managed by the provider, providing secure, cost-effective, on-demand scraping.

The core advantage is Lightweight Data Extraction: instead of running a full scraper continuously on a server, serverless functions execute only when needed, which makes them highly efficient. Use cases include:

Scheduled Scraping (e.g., extracting prices every 6 hours)

Real-time scraping triggered by user queries

API-less extraction where data is not available via public APIs

These functionalities allow businesses to collect data at scale without investing in infrastructure or DevOps.

Key Benefits of Serverless Web Scraping

Scalability on Demand

One of the strongest advantages of Serverless Web Scraping is automatic scaling. On platforms like AWS Lambda, Azure Functions, or Google Cloud Functions, your scraping tasks can grow from a few requests to thousands of concurrent executions without manual intervention, up to the provider's concurrency limits. For example, an e-commerce brand tracking product listings during flash sales can scale its Data Collection tasks to absorb large volumes of price updates across multiple platforms in real time.
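To make the scaling idea concrete, here is a minimal sketch of fanning a URL list out across many concurrent Lambda invocations. It assumes an already-deployed scraper function (the name `price-scraper` is hypothetical) and an injected client that behaves like `boto3.client("lambda")`. Each batch is sent with `InvocationType="Event"`, an asynchronous call, so Lambda runs the batches in parallel up to your account's concurrency limit.

```python
import json
from typing import Any, List


def chunk(urls: List[str], size: int) -> List[List[str]]:
    """Split the URL list into batches of at most `size` URLs."""
    if size <= 0:
        raise ValueError("batch size must be positive")
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def fan_out(lambda_client: Any, function_name: str, urls: List[str],
            batch_size: int = 25) -> int:
    """Send one asynchronous invocation per batch and return the number sent.

    `lambda_client` is expected to behave like boto3.client("lambda").
    """
    batches = chunk(urls, batch_size)
    for batch in batches:
        lambda_client.invoke(
            FunctionName=function_name,
            InvocationType="Event",  # async: Lambda queues the event and returns at once
            Payload=json.dumps({"urls": batch}).encode("utf-8"),
        )
    return len(batches)
```

In a real deployment this would be called as `fan_out(boto3.client("lambda"), "price-scraper", urls)`; batching keeps each invocation short while still letting the platform, not your code, decide how many run at once.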

Cost-Effectiveness (Pay-as-You-Go Model)

Traditional web scraping means paying for always-on servers regardless of usage. With serverless solutions, you pay only for invocations and the compute time your code actually uses. This pay-as-you-go model significantly reduces costs, especially for intermittent scraping tasks. For instance, a marketing agency running weekly Scheduled Scraping to track keyword rankings or competitor ads is billed only for those brief executions, which makes Serverless Web Scraping extremely budget-friendly.
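The pay-as-you-go claim is easy to sanity-check with arithmetic. AWS Lambda bills per request plus compute time in GB-seconds (allocated memory in GB multiplied by duration). The sketch below takes both prices as parameters; the example figures ($0.20 per million requests, $0.0000166667 per GB-second) are assumptions based on published x86 rates and should be checked against the provider's current pricing page. Free-tier allowances are ignored.

```python
def monthly_lambda_cost(invocations: int, avg_duration_s: float, memory_mb: int,
                        price_per_million_requests: float,
                        price_per_gb_second: float) -> float:
    """Estimate a monthly bill as request charges plus GB-second charges (no free tier)."""
    gb_seconds = invocations * avg_duration_s * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


# Hypothetical workload: 4 weekly runs x 500 invocations, 10 s each at 512 MB.
estimate = monthly_lambda_cost(2000, 10.0, 512, 0.20, 0.0000166667)
```

With those assumed rates the workload comes to 10,000 GB-seconds and roughly $0.17 for the month, which is the kind of figure that makes intermittent scraping cheap compared with a server billed around the clock.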

Zero Server Maintenance

Server management can be tedious and resource-intensive, especially when deploying at scale. Serverless platforms eliminate the need to provision, patch, or maintain infrastructure. A developer scraping real estate listings no longer needs to manage server health or uptime; instead, they focus solely on writing scraping logic, while the cloud provider handles the backend and keeps Lightweight Data Extraction running smoothly.

Improved Reliability and Automation

With Event-Based Triggers (like new data uploads, emails, or HTTP calls), serverless scraping functions run automatically when specific events occur, or on a schedule. This improves reliability and reduces the likelihood of missing important updates. For example, an Azure Function with a Blob Storage trigger can run every time a CSV file is uploaded to a storage container, automating the Data Collection pipeline.

Environmentally Efficient

Traditional servers consume energy 24/7, regardless of activity. Serverless environments run functions only when needed, minimizing energy usage and environmental impact. This makes Serverless Web Scraping an eco-friendly option. Businesses concerned with sustainability can reduce their carbon footprint while efficiently extracting vital business intelligence.

Ideal Use Cases for Serverless Web Scraping

1. Market and Price Monitoring

Serverless Web Scraping enables retailers and analysts to monitor competitor prices in real time using Scheduled Scraping or Event-Based Triggers.

Example:

A fashion retailer uses AWS Lambda to scrape competitor pricing data every 4 hours. This allows dynamic pricing updates without maintaining any servers, leading to a 30% improvement in pricing competitiveness and a 12% uplift in revenue.
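At its core, price monitoring compares each scheduled snapshot with the previous one. A minimal sketch, assuming prices have already been scraped into dictionaries keyed by a product identifier (the SKUs in the test are hypothetical), that reports every product whose price moved by at least a given percentage:

```python
from typing import Dict, List, Tuple


def price_changes(previous: Dict[str, float], current: Dict[str, float],
                  min_pct: float = 1.0) -> List[Tuple[str, float, float, float]]:
    """Return (sku, old, new, pct_change) for prices that moved by >= min_pct percent.

    Products missing from the previous snapshot (or priced at zero there) are
    skipped; new or delisted items would need separate handling.
    """
    changes = []
    for sku, new_price in current.items():
        old_price = previous.get(sku)
        if old_price is None or old_price == 0:
            continue
        pct = (new_price - old_price) / old_price * 100
        if abs(pct) >= min_pct:
            changes.append((sku, old_price, new_price, round(pct, 2)))
    # Largest moves first, so the biggest competitor price shifts surface at the top.
    return sorted(changes, key=lambda c: abs(c[3]), reverse=True)
```

The previous snapshot could live in S3 or a database between runs; the threshold keeps rounding noise from triggering repricing.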

2. E-commerce Product Data Collection

Collect structured product information (SKUs, availability, images, etc.) from multiple e-commerce platforms using Lightweight Data Extraction methods via serverless setups.

Example:

An online electronics aggregator uses Google Cloud Functions to scrape product specs and availability across 50+ vendors daily. By automating Data Collection, they reduce manual data entry costs by 80%.
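The structured-extraction step can be sketched without third-party packages. This version uses Python's built-in `html.parser` in place of BeautifulSoup and assumes a hypothetical page layout in which each product element carries `data-sku`, `data-price`, and `data-availability` attributes; real sites will need their own selectors.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class ProductParser(HTMLParser):
    """Collect products from any tag carrying a data-sku attribute (hypothetical layout)."""

    def __init__(self) -> None:
        super().__init__()
        self.products: List[Dict[str, object]] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # attrs arrives as a list of (name, value) pairs
        sku = attributes.get("data-sku")
        if not sku:
            return
        raw_price = attributes.get("data-price")
        price: Optional[float] = float(raw_price) if raw_price else None
        self.products.append({
            "sku": sku,
            "price": price,
            "in_stock": attributes.get("data-availability") == "in_stock",
        })


def extract_products(html: str) -> List[Dict[str, object]]:
    """Parse a listing page into a list of product dicts."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products
```

Keeping the parser dependency-free also keeps the function's deployment package small, which matters for cold-start times.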

3. Real-Time News and Sentiment Tracking

Use Web Scraping to monitor breaking news or industry-relevant updates and feed the results into dashboards or sentiment engines.

Example:

A fintech firm uses Azure Functions to scrape financial news from Bloomberg and CNBC every 5 minutes. The data is piped into a sentiment analysis engine, helping traders act faster based on market sentiment—cutting reaction time by 40%.

4. Social Media Trend Analysis

Track hashtags, mentions, and viral content in real time across platforms like Twitter, Instagram, or Reddit using Serverless Web Scraping.

Example:

A digital marketing agency leverages AWS Lambda to scrape trending hashtags and influencer posts during product launches. This real-time Data Collection enables live campaign adjustments, improving engagement by 25%.

5. Mobile App Backend Scraping Using Mobile App Scraping Services

Extract backend content and APIs from mobile apps using Mobile App Scraping Services hosted via Cloud Providers.

Example:

A food delivery startup uses Google Cloud Functions to scrape menu availability and pricing data from a competitor’s app every 15 minutes. This helps the startup optimize its own platform in real time, improving response speed and user satisfaction.

Technical Workflow of a Serverless Scraper

In this section, we’ll outline how a Lambda-based scraper works and how to integrate it with Web Scraping API Services and cloud triggers.

1. How a Typical Lambda-Based Scraper Works, Step by Step

A Lambda-based scraper runs serverless functions that handle the data extraction process. Here’s a step-by-step workflow for a typical AWS Lambda-based scraper:

Step 1: Function Trigger

Lambda functions can be triggered by various events. Common triggers include API calls, file uploads, or scheduled intervals.

For example, a scraper function can be triggered by a cron job or a Scheduled Scraping event.

In a typical scheduled scraper:

The Lambda function is triggered on a schedule (using EventBridge or CloudWatch).

requests.get fetches the web page.

BeautifulSoup processes the HTML to extract relevant data.
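A minimal sketch of such a scheduled handler follows. It swaps in the standard library (`urllib.request` for `requests.get`, `html.parser` for BeautifulSoup) so it runs without a deployment package; the `TARGET_URL` environment variable, the fallback URL, and the choice to extract the page title and links are assumptions for illustration. `lambda_handler(event, context)` is the standard Python handler signature; the extra `fetch` parameter exists only so the logic can be tested offline.

```python
import json
import os
import urllib.request
from html.parser import HTMLParser
from typing import Callable, List


class TitleAndLinks(HTMLParser):
    """Grab the <title> text and every href (standing in for BeautifulSoup)."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.links: List[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page (the role requests.get(url).text plays in the original)."""
    req = urllib.request.Request(url, headers={"User-Agent": "serverless-scraper-sketch/0.1"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def lambda_handler(event, context, fetch: Callable[[str], str] = fetch_html):
    """Entry point invoked by the EventBridge/CloudWatch schedule."""
    url = event.get("url") or os.environ.get("TARGET_URL", "https://example.com/")
    parser = TitleAndLinks()
    parser.feed(fetch(url))
    parser.close()
    result = {"url": url, "title": parser.title.strip(), "links": parser.links}
    return {"statusCode": 200, "body": json.dumps(result)}
```

Scheduled events carry no `url` key, so in production the function falls back to the environment variable; passing `url` in the event is handy for manual test invocations.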

Step 2: Data Collection

After triggering the Lambda function, the scraper fetches data from the targeted website. Data extraction logic is handled in the function using tools like BeautifulSoup or Selenium.

Step 3: Data Storage/Transmission

After collecting data, the scraper stores or transmits the results:

Save data to AWS S3 for storage.

Push data to an API for further processing.

Store results in a database like Amazon DynamoDB.
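For the S3 option, the storage step might look like the sketch below. It assumes an injected client that behaves like `boto3.client("s3")`, whose `put_object` call accepts `Bucket`, `Key`, `Body`, and `ContentType`; the bucket name and the date-partitioned key layout are hypothetical conventions, not requirements.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional


def build_key(prefix: str, now: datetime) -> str:
    """Date-partitioned object key, e.g. prices/2024/05/01/120000.json."""
    return f"{prefix}/{now:%Y/%m/%d/%H%M%S}.json"


def save_results(s3_client: Any, bucket: str, prefix: str,
                 records: List[Dict[str, Any]],
                 now: Optional[datetime] = None) -> str:
    """Serialize scraped records as JSON, upload them, and return the object key."""
    now = now or datetime.now(timezone.utc)
    key = build_key(prefix, now)
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps(records).encode("utf-8"),
        ContentType="application/json",
    )
    return key
```

Partitioning keys by date keeps each run's output separate and makes later batch processing (or an S3-triggered function) easy to scope.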

2. Integration with Web Scraping API Services

Lambda can be used to call external Web Scraping API Services to handle more complex scraping tasks, such as bypassing captchas, managing proxies, and rotating IPs.

For instance, if you're using a service like ScrapingBee or ScraperAPI, the Lambda function can make an API call to fetch data.

With this setup, ScrapingBee handles the scraping complexities, and the Lambda function simply calls its API.
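As a sketch of that call, the function below builds a GET request to ScrapingBee's HTTP endpoint with `api_key` and `url` query parameters. The endpoint URL and the `render_js` option reflect ScrapingBee's public documentation as we understand it; verify both against the current docs before relying on them. Reading the key from a `SCRAPINGBEE_API_KEY` environment variable is our own convention.

```python
import os
import urllib.parse
import urllib.request

# Assumed endpoint; confirm against ScrapingBee's current API documentation.
SCRAPINGBEE_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def build_scrapingbee_url(target_url: str, api_key: str, render_js: bool = False) -> str:
    """Compose the API request URL; urlencode escapes the target URL safely."""
    params = {
        "api_key": api_key,
        "url": target_url,
        "render_js": "true" if render_js else "false",
    }
    return SCRAPINGBEE_ENDPOINT + "?" + urllib.parse.urlencode(params)


def lambda_handler(event, context):
    """Delegate the fetch to the scraping API; proxies and retries are its job."""
    api_key = os.environ["SCRAPINGBEE_API_KEY"]
    request_url = build_scrapingbee_url(event["url"], api_key, event.get("render_js", False))
    with urllib.request.urlopen(request_url, timeout=60) as resp:
        status = resp.status
        html = resp.read().decode("utf-8", errors="replace")
    return {"statusCode": status, "length": len(html)}
```

The generous timeout reflects that rendered requests through a scraping API can take several seconds; the Lambda function's own timeout must be set higher than this.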

3. Using Cloud Triggers and Events

Lambda functions can be triggered in multiple ways based on events. Here are some examples of triggers used in Serverless Web Scraping:

Scheduled Scraping (Cron Jobs):

You can use AWS EventBridge or CloudWatch Events to schedule your Lambda function to run at specific intervals (e.g., every hour, daily, or weekly).

For example, a CloudWatch Events (EventBridge) rule with the schedule expression rate(1 hour) triggers the Lambda function to scrape a webpage every hour.
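One way to create that hourly rule programmatically is sketched below, assuming injected clients that behave like `boto3.client("events")` and `boto3.client("lambda")`. The three operations used (`put_rule`, `put_targets`, and `add_permission`, which lets EventBridge invoke the function) are real boto3 calls; the rule name, statement ID, and function ARN are placeholders. The same schedule could be written as the cron expression `cron(0 * * * ? *)`.

```python
from typing import Any


def schedule_scraper(events_client: Any, lambda_client: Any, function_arn: str,
                     rule_name: str = "hourly-scrape",
                     schedule: str = "rate(1 hour)") -> str:
    """Create or update a scheduled rule and point it at the scraper function.

    Note: add_permission raises an error if the statement ID already exists,
    so rerunning this for the same rule needs that case handled.
    """
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule,
        State="ENABLED",
    )["RuleArn"]
    # Allow EventBridge to invoke the function, scoped to this rule only.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "scraper", "Arn": function_arn}],
    )
    return rule_arn
```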

File Upload Trigger (Event-Based):

Lambda can be triggered by file uploads in S3. For example, after scraping, if the data is saved as a file, the file upload in S3 can trigger another Lambda function for processing.

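To set this up, configure an S3 event notification on the bucket (for example, for s3:ObjectCreated:* events) and point it at the processing function.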
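A minimal sketch of the processing function's entry point: S3 delivers a notification event whose `Records` list carries the bucket name and object key for each upload. Keys arrive URL-encoded (a space becomes `+`), hence `unquote_plus`. What happens to each file afterwards is left to a hypothetical `process_file` hook.

```python
import urllib.parse
from typing import Any, Callable, Dict, List, Tuple


def uploaded_objects(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 notification event."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def process_file(bucket: str, key: str) -> None:
    """Hypothetical hook: download, clean, and load the scraped file here."""
    print(f"processing s3://{bucket}/{key}")


def lambda_handler(event, context, handler: Callable[[str, str], None] = process_file):
    """Run the processing hook once per uploaded object."""
    objects = uploaded_objects(event)
    for bucket, key in objects:
        handler(bucket, key)
    return {"processed": len(objects)}
```

If the processing function writes its output back to the same bucket and prefix that triggers it, each write fires the function again; sending results to a different bucket or prefix avoids that loop.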

By building Serverless Web Scraping on AWS Lambda, you can scale your scraping tasks with Event-Based Triggers such as Scheduled Scraping, API calls, or file uploads. This approach avoids the complexity of infrastructure management while still delivering scalable, automated data collection.

#LightweightDataExtraction#AutomatedDataExtraction#StreamlineDataExtraction#ServerlessWebScraping#DataMining
